Image recognition is one of the most important and exciting aspects of neural network technology. This project attempts to build a facial expression classifier engine using a known image dataset, the 10k US Adult Faces Database.

Some of the images in the original dataset.

The task was to use Convolutional Neural Network (CNN) analysis to classify the images by sex and by facial expression, using Keras with a TensorFlow back end. Earlier studies had shown the VGG16 model to be particularly effective at image recognition, so the original plan was to train an image classifier on the dataset's 2,222 pre-classified images. The problem with this approach was that, with regard to facial expression, there was a significant class imbalance in favor of classes 0 (neutral) and 1 (happy).
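As a rough illustration of the Keras/VGG16 setup described above, the following is a minimal transfer-learning sketch. It is not the notebook's actual code: the function name `build_expression_classifier`, the size of the dense head, the dropout rate, and the optimizer are all assumptions chosen for illustration.

```python
from tensorflow.keras import layers, models
from tensorflow.keras.applications import VGG16


def build_expression_classifier(num_classes, input_shape=(224, 224, 3),
                                weights="imagenet"):
    """Attach a small classification head to a frozen VGG16 base.

    Hypothetical helper: the head layout below is an assumption,
    not the configuration used in the original project.
    """
    base = VGG16(weights=weights, include_top=False, input_shape=input_shape)
    base.trainable = False  # keep the pre-trained convolutional filters fixed

    inputs = layers.Input(shape=input_shape)
    x = base(inputs, training=False)
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dense(256, activation="relu")(x)  # assumed head size
    x = layers.Dropout(0.5)(x)                   # assumed regularization
    outputs = layers.Dense(num_classes, activation="softmax")(x)

    model = models.Model(inputs, outputs)
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

Freezing the base means only the new head is trained, which is a common starting point when the dataset is small, as it is here.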

The first remedy for this imbalance was to write a basic web scraper using Beautiful Soup, as will be shown later, in order to increase the numbers of the under-represented expression classes. Once the numbers of these classes had been increased, attempts were made to classify the images. Results were mixed, for either or both of two possible reasons: the disparate nature of the scraped images, and possibly inaccurate classifications by the survey respondents.
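The core of a basic Beautiful Soup scraper of this kind is pulling image URLs out of a page. The sketch below shows only that parsing step on a hard-coded HTML string; the function name `extract_image_urls`, the sample markup, and the `example.com` URLs are hypothetical, and a real scraper would first download the page and then the images themselves.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def extract_image_urls(html, base_url):
    """Return absolute URLs for every <img> tag that has a src attribute."""
    soup = BeautifulSoup(html, "html.parser")
    urls = []
    for img in soup.find_all("img"):
        src = img.get("src")
        if src:
            # Resolve relative paths against the page URL.
            urls.append(urljoin(base_url, src))
    return urls


# Hypothetical page content; a real run would fetch HTML over HTTP first.
sample_html = """
<html><body>
  <img src="/faces/sad_01.jpg" alt="sad face">
  <img src="https://example.com/faces/angry_02.jpg">
  <img alt="no source attribute">
</body></html>
"""
image_urls = extract_image_urls(sample_html, "https://example.com/search")
print(image_urls)
```

Images gathered this way vary widely in lighting, framing, and resolution, which is consistent with the mixed results reported above.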

As such, the decision was made to trawl through the remaining 7,946 images that had not been pre-classified, and to find class exemplars among those to represent the under-represented classes. Two of the classes, 'scared' and 'disgusted', were dropped due to a dearth of available exemplars within the database. This resulted in a dataset of the following numbers, upon which the analysis began:
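One simple way to check class balance before training is to count the image files in each class folder. The sketch below assumes a one-sub-folder-per-class layout (for example `train/happy/`, `train/sad/`), which is a common Keras convention but is not confirmed by the article; the function names are hypothetical.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(root):
    """Map each class sub-folder name to the number of image files in it."""
    counts = {}
    for class_dir in sorted(Path(root).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def imbalance_ratio(counts):
    """Largest class size divided by the smallest (1.0 means balanced).

    Assumes every class contains at least one image.
    """
    return max(counts.values()) / min(counts.values())
```

A ratio well above 1.0 signals that the smaller classes need more exemplars, which is the problem the scraping and manual trawling were meant to address.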

This set of classes evolved throughout the course of the project, and some classes were subsequently dropped, as will be explained later.

What would be the possible use case for a Facial Expression Classifier?

Although this type of technology has a wide variety of potential applications, many of which have to do with security, this study is aimed at workplace wellness. This work could serve as the precursor to a Human Resources app that would, for example, poll employees periodically on their current mood. The work conducted in this study, if successful, could be used as visual support or verification of employees' typed or spoken responses to this polling. This could be achieved via an iPad or a phone app that would take a snapshot of the subject's face after the person's name or employee ID has been entered into the system.

With a sufficiently high face-expression-recognition accuracy, the algorithm could perhaps detect when a subject is under personal stressors that even they may not be aware of. The effectiveness, and therefore the commercial viability, of this application will of course depend on its predictive accuracy. All tweaks and modifications to the models developed will be made with the goal of maximizing this accuracy.

The VGG16 model was 90% accurate in identifying people's sex based on an image of their face.

As a control on a less challenging task, the decision was made to do an initial classification using sex as the distinguishing criterion. Note that this was done on images obtained from the original dataset, excluding any scraped images.

The model achieved an impressive 90% accuracy in distinguishing between male and female faces. The visualized confusion matrix shows that the model is slightly more accurate in identifying male faces than female. In all, the results from this part of the analysis showed that the VGG16 model was properly configured and was responsive to the characteristics of the dataset. Having established this, attention could now be turned to the more challenging problem of identifying facial expressions.

Emotion recognition is a considerably more subjective and difficult task than sex classification. Here the images were separated into five classes: neutral, happy, sad, angry, and surprised. In all cases, data augmentation was used because this dataset was even smaller than the one used for sex classification. The training, test, and validation file paths were modified to point to different pre-populated folders.
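To make the idea of data augmentation concrete, here is a small NumPy sketch that produces randomly flipped and shifted copies of one image. It is a stand-in for illustration only: the project most likely relied on Keras's own augmentation utilities, and the `augment` function, the chosen transforms, and the `max_shift` value are assumptions.

```python
import numpy as np


def augment(image, rng, max_shift=4):
    """Return a randomly flipped and shifted copy of an H x W x C image."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # horizontal flip (mirror left-right)

    # Pad with edge pixels, then crop a window at a random offset. This
    # shifts the content by up to max_shift pixels and keeps the size.
    h, w, _ = out.shape
    pad = ((max_shift, max_shift), (max_shift, max_shift), (0, 0))
    padded = np.pad(out, pad, mode="edge")
    top = rng.integers(0, 2 * max_shift + 1)
    left = rng.integers(0, 2 * max_shift + 1)
    return padded[top:top + h, left:left + w, :]


rng = np.random.default_rng(seed=0)
face = rng.random((64, 64, 3))  # random stand-in for a real face image
batch = np.stack([augment(face, rng) for _ in range(8)])
print(batch.shape)  # (8, 64, 64, 3)
```

Each pass over the training data then sees slightly different versions of the same faces, which helps a model trained on a small dataset generalize.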

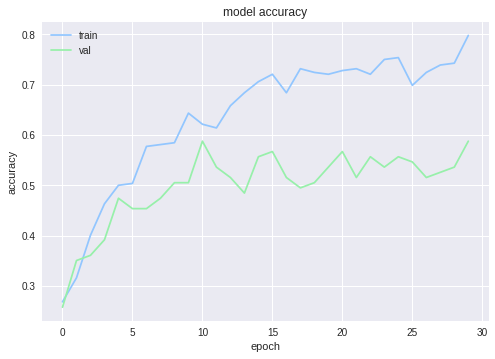

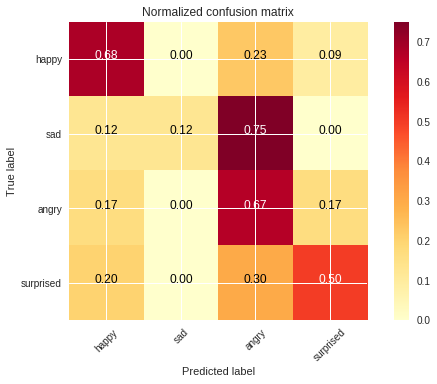

After trying numerous model configurations, the best accuracy obtained in facial-expression classification with four classes was 56.9%. The full confusion matrix for precision and recall determination is shown below in graphical form. Note that the 'neutral' class was removed, mainly because the model did not seem to distinguish it well from the other classes.
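For readers unfamiliar with how precision and recall are read off a confusion matrix, the following sketch computes both per class in plain Python. The label lists are made up purely for illustration and are not the article's results; the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Build a matrix whose rows are true classes and columns predictions."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def precision_recall(matrix, labels):
    """Return {label: (precision, recall)} from a confusion matrix."""
    scores = {}
    for i, label in enumerate(labels):
        true_pos = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column total
        actual = sum(matrix[i])                    # row total
        precision = true_pos / predicted if predicted else 0.0
        recall = true_pos / actual if actual else 0.0
        scores[label] = (precision, recall)
    return scores


labels = ["happy", "sad", "angry", "surprised"]
# Made-up labels for illustration only; NOT the article's results.
y_true = ["happy", "happy", "sad", "sad", "angry", "surprised"]
y_pred = ["happy", "sad", "sad", "sad", "happy", "surprised"]

matrix = confusion_matrix(y_true, y_pred, labels)
scores = precision_recall(matrix, labels)
```

Precision answers "of the faces predicted as a class, how many really were that class", while recall answers "of the faces that really were that class, how many were found".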

Other combinations were attempted, such as a head-to-head between the best performing classes (happy and sad). All of those are shown in the notebook linked at the bottom of this article. What we see below, though, are the results for the VGG16 model applied to a 4-outcome-class dataset:

In this project, a considerable amount was learned about how to train a Convolutional Neural Network to recognize facial expressions, and thereby infer subject mood. This serves as an effective, well-functioning platform for the wellness app that was conceptualized earlier. In further study, I would like to explore the use of other pre-trained models to improve accuracy.

Perhaps the accuracy would be better if we were to re-train the model on more extreme exemplars of each facial expression, i.e., use only the brightest smiles or the angriest faces, to see whether more extreme expressions help the model to learn more effectively. Overfitting was another issue that was never resolved; it manifested itself on all model runs except the first one, the sex classification problem.

The full notebook for this project can be found here.